Can I circumvent running out of RAM during a simulation study?

Dear all,

I'm working on a simulation study but SPSS seems unable to handle the amount of data involved. I'm basically simulating data, running tests and capturing the output with OMS. After running all tests, a new dataset with results is generated, edited and saved.

Things ran fine for 100 iterations per condition. I first saved the raw, unedited data (immediately after OMSEND) and it turned out to be a 67MB .sav file. I kind of expected that for 1,000 iterations I'd end up with some 670MB of data, but SPSS crashed. When it did, it was consuming 4GB of RAM (I'm on Windows 8.1 with 6GB RAM).

I then realized that when you save a dataset as a .sav file, it gets compressed by default. Since the dataset generated by OMS contains huge (redundant) string constants, my current guess is that the file compression accounts for the difference between the 670MB .sav file I was expecting and the 4GB of RAM SPSS was consuming.

The first thing that came to mind is to modify the OMS command and have it generate a .sav file instead of a dataset. Perhaps in this case it will actually generate the expected 670MB data file. However, these data are generated by one single OMS specification, so SPSS may need to hold all captured output in memory anyway until it can write the compressed .sav file. Or does it write the outfile "on the fly"? And even if the .sav file can be generated, will it decompress upon opening it? And if so, could a 670MB file become too large for my 6GB RAM system?

In short, does anybody have an idea how these things work? And more importantly, does anybody have an idea how to get the 1,000 iterations job done on my current system anyway?

The reason I'm not simply trying to have OMS write a .sav file is that the syntax runs for some 5 hours, during which it completely hijacks my system. I thought it would be a better idea to first ask whether there's even a chance of this succeeding.

Re: Can I circumvent running out of RAM during a simulation study?

Are you using the built-in simulation procedures? I rather suspect not from your description, but if you are doing the type of model-based simulation that procedure is designed for, it can generate arbitrarily large datasets without consuming all the memory.

Whether OMS has to hold all the captured output in memory depends on the destination type, because some types of files can't be streamed out incrementally. Plain text can, so it won't gobble up all the memory - at least in recent versions. Generating a sav file vs a dataset makes no difference. A dataset is still disk-based. It's more like a temporary sav file. (You might also want to use zsav, which gives much better compression, although it is a bit slower than a sav file.)

Jon Peck (no "h") aka Kim
Senior Software Engineer, IBM

Re: Can I circumvent running out of RAM during a simulation study?

Thank you Jon!

If a dataset doesn't consume more RAM than its file size when saved, then perhaps the amount of OMS captured output is not what accounts for the 4GB RAM consumed because I expect it to be around 670MB. If so, then perhaps choosing TEXT or TABTEXT as DESTINATION FORMAT won't make any difference (the CSR, version 22, doesn't mention ZSAV here).

Perhaps the simulated datasets on which the output is based are the problem? I'm basically having Python generate 240 datasets (conditions in factorial design), each holding 1,000 variables (iterations within conditions). However, there's never more than 2 datasets open at once and a test run over 240 datasets each containing 100 variables completed just fine in about half an hour.

Alternatively, is it conceivable that the open viewer window could consume such a vast amount of RAM? If so, perhaps suppressing all output from the viewer with an additional OMS command could improve the situation.

Apart from this, are there other troubleshooting suggestions I could try to gain a little more insight into what causes the crash?

Thanks a lot!

Ruben

Re: Can I circumvent running out of RAM during a simulation study?

A dataset will only have one or perhaps a few cases in memory at a time - vastly less than its saved file size. MATRIX might hold more, but still nothing like gigabytes of stuff.

I don't understand what the output, especially the Viewer output, actually is or exactly what OMS is capturing, but since the Viewer contents are entirely in memory while ordinarily case data is not, it's certainly possible that it could get quite large. There have been some improvements in memory management in OMS in recent releases, so memory usage may depend on the Statistics version. You could certainly test by just omitting the OMS code and comparing memory usage.

You can get some insight by looking in the Task Manager at the various processes associated with Statistics and watching CPU and memory usage:

spssengine.exe - the backend (data and computation)
stats.exe - the frontend (Data Editor, Viewer, etc.)
startx32 - Python or R processes

Another possibility would be to run this in external mode from Python so that there would be no frontend overhead at all. That can sometimes be a very big saving.

If you have installed the STATS BENCHMRK extension command, you can capture a lot of data on memory, CPU, I/O etc. corresponding to statistics observable in the Task Manager as a csv file that can be analyzed with Statistics. (It requires the Python win32 extensions.) There is also a recently added class, tSubmit, in spssaux2.py that makes it convenient to time blocks of commands run via Submit, but it does not measure memory.

Jon Peck
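The Task Manager check described in this post could also be scripted so that memory is sampled while the job runs. The following is only a minimal sketch: it assumes the CSV layout produced by the Windows `tasklist /FO CSV /NH` command (image name first, memory usage in the fifth column, in KB) and the process names mentioned above. The function names `parse_tasklist_csv` and `sample_memory` are hypothetical, and the number formatting of the memory column may differ by locale.

```python
import csv
import io
import subprocess

# Process names taken from the post above; matched by prefix, case-insensitively.
WATCHED = ("spssengine.exe", "stats.exe", "startx32")


def parse_tasklist_csv(text, watched=WATCHED):
    """Return {image name: memory in KB} for the watched processes.

    Assumes each row looks like:
    "image name","pid","session name","session#","mem usage"
    """
    usage = {}
    for row in csv.reader(io.StringIO(text)):
        if len(row) < 5:
            continue  # skip blank or malformed rows
        name = row[0].lower()
        if not name.startswith(watched):
            continue
        # Keep only the digits of e.g. "3,456,789 K".
        digits = "".join(ch for ch in row[4] if ch.isdigit())
        if digits:
            # Sum over multiple instances of the same image name.
            usage[name] = usage.get(name, 0) + int(digits)
    return usage


def sample_memory():
    """Run tasklist once (Windows only) and parse its output."""
    result = subprocess.run(
        ["tasklist", "/FO", "CSV", "/NH"],
        capture_output=True, text=True, check=True,
    )
    return parse_tasklist_csv(result.stdout)
```

Calling `sample_memory()` every few seconds from a separate Python process and logging the result would show whether the backend or the frontend is the one that grows.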

Re: Can I circumvent running out of RAM during a simulation study?

Thanks Jon!

I'll experiment with these options and see whether that gets the job done.

Best,

Ruben

Re: Can I circumvent running out of RAM during a simulation study?

After suppressing all output from the viewer, I successfully ran the entire simulation study. I had OMS write all results directly to a .sav file which resulted in some 735MB, very close to the 670MB I was expecting.

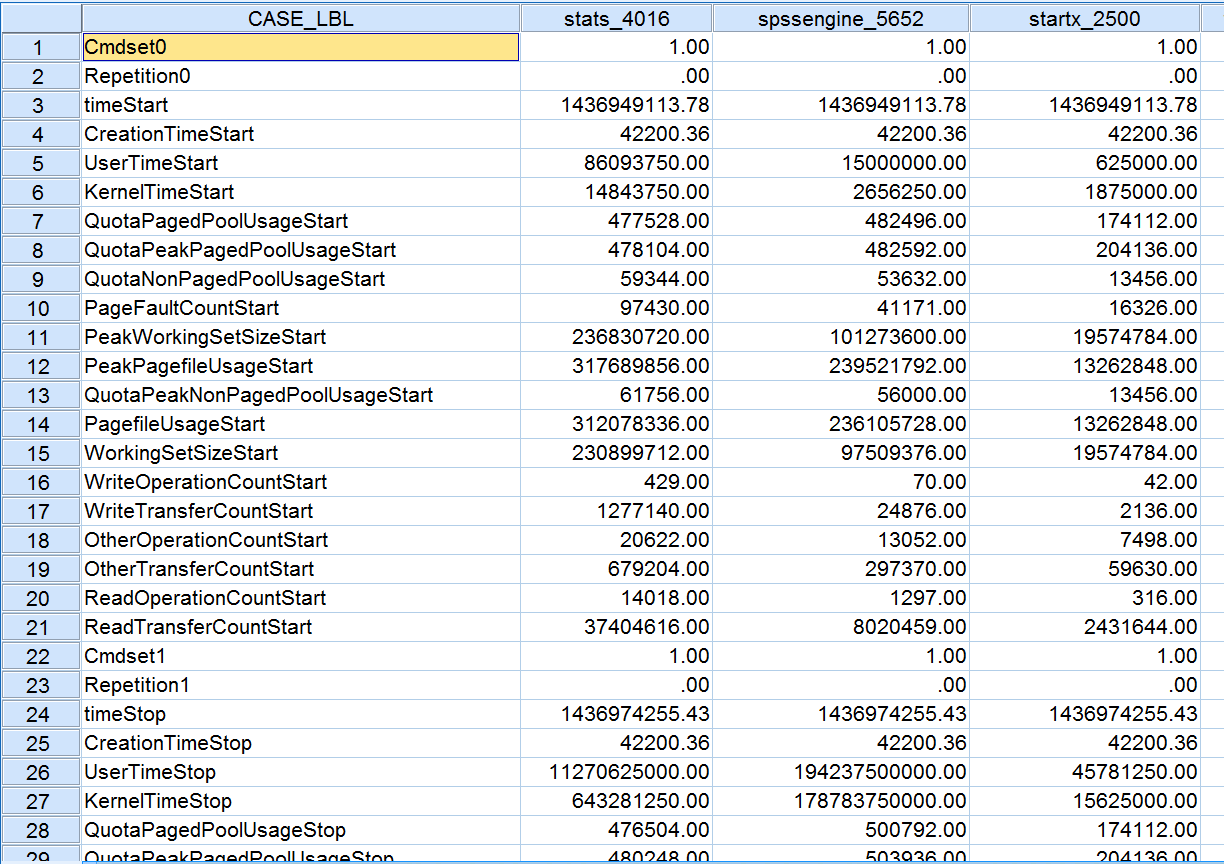

However, memory consumption still remained dangerously high, in the end exceeding some 3.3GB. This surely wasn't output contained by the viewer window because it remained basically empty. I'd really like to know why writing a 735MB .sav file can result in 3.3GB RAM consumption.

I tried the STATS BENCHMRK extension but I've no idea how to interpret the results. If anybody would be willing to take a quick look, I'd be very grateful for that. I'll attach a screenshot as well as the entire .sav file after reading in the .csv and FLIPping it.

Thanks!

Ruben

Attachment: stats_benchmrk_1.sav
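The setup described in this post (all Viewer output suppressed, OMS writing results straight to a .sav file) might look roughly like the sketch below. The actual syntax used in the study was not posted, so everything here is an assumption: the helper names `build_oms_syntax` and `run_in_spss`, the tags, the output path, and the `'T-Test'` command identifier are hypothetical placeholders. The helper only builds the syntax text; submitting it requires the `spss` module that ships with SPSS Statistics.

```python
def build_oms_syntax(outfile, commands=("T-Test",)):
    """Build two OMS requests as one block of SPSS syntax.

    The first request keeps all output out of the Viewer; the second
    routes tables from the listed commands to a .sav file.
    """
    command_list = " ".join("'%s'" % name for name in commands)
    lines = [
        "OMS /SELECT ALL /DESTINATION VIEWER=NO /TAG='quiet'.",
        "OMS /SELECT TABLES",
        "  /IF COMMANDS=[%s]" % command_list,
        "  /DESTINATION FORMAT=SAV OUTFILE='%s' VIEWER=NO" % outfile,
        "  /TAG='results'.",
    ]
    return "\n".join(lines)


# Ends all active OMS requests, which closes the output file.
OMS_END = "OMSEND."


def run_in_spss(syntax):
    """Submit syntax; only works where the spss module is installed."""
    import spss  # provided by IBM SPSS Statistics, not by pip
    spss.Submit(syntax)
```

A run would then be `run_in_spss(build_oms_syntax(...))`, followed by the simulation commands and finally `run_in_spss(OMS_END)`.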

Re: Can I circumvent running out of RAM during a simulation study?

Much easier to read the numbers if you turn on the grouping separator in Edit > Options!

It's clear that the high memory usage is in the backend process. The PeakWorkingSetSizeStop number is the most useful number. It accounts for 3.6GB, although the frontend still peaks at almost 500MB. In a system at rest, the backend would be using 130-180MB, and the frontend < 300MB.

The OMS intermediate sav file contents are not compressed, so that would account for the difference between the memory usage and the sav file size.

But what are you doing that you need to capture this output with OMS? Since OMS operates on the output that would appear in the Viewer, that volume is really unusual. Is OMS really the only way to capture it?

External mode would eliminate the frontend memory usage and give you some breathing room.

The most memory I have ever used in a Statistics session is 14GB on a job that ran for 8+ hours. I have 16GB of RAM. The system did survive this stress :-)

Jon Peck

Re: Can I circumvent running out of RAM during a simulation study?

Jon, can you be more precise about where to find the grouping separator setting? I'm not seeing it. Is this something new in v23? (I'm still on v22). Thanks for clarifying!

Bruce Weaver

Re: Can I circumvent running out of RAM during a simulation study?

It's not new in 23 - it was added in V19.

Edit > Options > General: "Apply locale's digit grouping format to numeric variables" or

SET DIGITGROUPING = NO or YES.

Jon Peck

Re: Can I circumvent running out of RAM during a simulation study?

In reply to this post by Jon K Peck

@Jon: could I perhaps borrow your computer for a couple of days? :-)

But anyway, great tip about the digit grouping! That is:

show digitgrouping.
set digitgrouping yes.

I only knew digit grouping from either table templates or chart templates or both (not sure).

"But what are you doing that you need to capture this output with OMS?"

Well, if you really want to know: I'm basically drawing 320,000 samples according to an 8 * 4 * 5 * 2 * 10 full factorial design, I delete some values, run MULTIPLE IMPUTATION and T TEST and capture the main t-test results (p values and so on).

It seemed obvious to me to use OMS for this but perhaps a faster alternative would have been spssaux.CreateXMLOutput() because the relevant output is some 30MB as opposed to 730MB of raw output. The raw OMS output also has long (redundant) string constants which - I'd expect - take up a ton of disk space when left uncompressed. However, the OMS way was relatively easy to manage. If you'd like to look into it, I could perhaps send you the syntax (not really that much).

Lots of thanks once again!

Ruben

Re: Can I circumvent running out of RAM during a simulation study?

Memory is pretty cheap these days, but I'm not lending any of it out :-)

spssaux.CreateXMLOutput() won't help with speed here, because it uses OMS in the same way you would directly in syntax, except that it would be writing the t test results into the in-memory workspace.

However, you might be able to simplify the whole operation. The t tests depend only on the N's, means, and std deviations, so you might use AGGREGATE to compute all those at once, breaking on the design parameters. Then calculate the test with a simple COMPUTE (depending on whether you assume equal variance or not), and the significance level if needed. Then just save those output variables. The multiple imputation might make some difficulties, though, if you need to do the combining of the imputations with Rubin's rules.

Although OMS doesn't support it, zsav format is likely to reduce the file size a lot compared to sav. I have seen typical ratios of 1/5 the size, although it may be a bit slower to process.

Jon Peck
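To illustrate the suggestion above, here is a minimal sketch of an independent-samples t test computed from nothing but N, mean and standard deviation per group, plus the basic pooling step of Rubin's rules. It is written in plain Python rather than AGGREGATE and COMPUTE, the function names `t_from_summary` and `pool_rubin` are hypothetical, and it assumes the study's t tests compare two independent groups, which the thread does not state.

```python
import math


def t_from_summary(n1, mean1, sd1, n2, mean2, sd2, equal_var=True):
    """Return (t, df) for two independent groups from summary statistics."""
    var1, var2 = sd1 ** 2, sd2 ** 2
    if equal_var:
        # Pooled-variance (Student) version.
        df = n1 + n2 - 2
        pooled = ((n1 - 1) * var1 + (n2 - 1) * var2) / df
        se = math.sqrt(pooled * (1.0 / n1 + 1.0 / n2))
    else:
        # Welch version with Satterthwaite degrees of freedom.
        a, b = var1 / n1, var2 / n2
        se = math.sqrt(a + b)
        df = (a + b) ** 2 / (a ** 2 / (n1 - 1) + b ** 2 / (n2 - 1))
    return (mean1 - mean2) / se, df


def pool_rubin(estimates, variances):
    """Pool m imputed-data estimates and their squared standard errors.

    Returns (pooled estimate, total variance), where total variance is
    within-imputation variance plus (1 + 1/m) times between-imputation
    variance.
    """
    m = len(estimates)
    q_bar = sum(estimates) / m
    within = sum(variances) / m
    between = sum((q - q_bar) ** 2 for q in estimates) / (m - 1)
    return q_bar, within + (1.0 + 1.0 / m) * between
```

The two-sided significance level is left out because the t distribution is not in Python's standard library; in SPSS syntax it could be computed from these values with the CDF.T function, for example as 2 * (1 - CDF.T(ABS(t), df)).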
