Fleiss kappa

|

Hello,

I am trying to use Fleiss kappa to determine the interrater agreement between 5 participants, but I am new to SPSS and struggling.

Do I need a macro file to do this? (If so, how do I find/use this?)

My research requires 5 participants to answer 'yes', 'no', or 'unsure' on 7 questions for one image, and there are 30 images in total.

Can I work out kappa for the data as a whole, or am I best splitting it and working out the kappa for each image?

I am really stuck on how to work this out and I am unable to get help from my tutor for this!

If anyone could give any advice, wants more information, or could talk me through how to do this, I would greatly appreciate it!

Thank you,

Amy |

|

Have you looked at the STATS FLEISS KAPPA extension command available from the SPSS Community website (www.ibm.com/developerworks/spssdevcentral) in the Extension Commands collection or, in Statistics 22 or later, via the Utilities menu?

Jon Peck (no "h") aka Kim
Senior Software Engineer, IBM
[hidden email]
phone: 720-342-5621

From: Vickers1994 <[hidden email]>
To: [hidden email]
Date: 03/23/2015 01:25 PM
Subject: [SPSSX-L] Fleiss kappa
Sent by: "SPSSX(r) Discussion" <[hidden email]>

--
View this message in context: http://spssx-discussion.1045642.n5.nabble.com/Fleiss-kappa-tp5729024.html
Sent from the SPSSX Discussion mailing list archive at Nabble.com.

=====================
To manage your subscription to SPSSX-L, send a message to [hidden email] (not to SPSSX-L), with no body text except the command. To leave the list, send the command SIGNOFF SPSSX-L
For a list of commands to manage subscriptions, send the command INFO REFCARD |

|

In reply to this post by Vickers1994

Amy,

I think it's best to derive a kappa for each image, since there are likely to be differences among images in how difficult it is to reach agreement. One of the important functions of an inter-rater study is to uncover sources of difficulty for the judges so that they can be addressed in future trainings. You can also do an overall kappa, but it would be over five raters and 210 items/subjects (7 questions x 30 images), so the likelihood that a small kappa would be significant is heightened. On the other hand, it would provide the reader with a global index of agreement, and there is some value in that. My recommendation is an overall kappa followed by individual image kappas.

When you do the kappas, look at the 95% CIs. If any of them cross 0, then regardless of the significance level, there isn't going to be much practical meaning, because agreement below the expected proportion is a viable true value.

Brian

Brian Dates, M.A.
Director of Evaluation and Research | Evaluation & Research | Southwest Counseling Solutions
Southwest Solutions
1700 Waterman, Detroit, MI 48209
313-841-8900 (x7442) office | 313-849-2702 fax
[hidden email] | www.swsol.org |
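For anyone weighing the overall-versus-per-image question, here is a minimal Python sketch of the standard Fleiss' kappa formula. It is not the code used by the SPSS extension command. It assumes every item is rated by the same number of raters, and the function name `fleiss_kappa` and the 1/2/3 coding for yes/no/unsure are illustrative assumptions, not anything taken from the thread.

```python
from collections import Counter


def fleiss_kappa(ratings, categories):
    """Fleiss' kappa for a list of items.

    ratings: one row per item; each row holds one category label per rater.
    categories: every label that may occur (e.g. [1, 2, 3]).
    All rows must contain the same number of raters.
    """
    n_items = len(ratings)
    n_raters = len(ratings[0])

    # counts[i][j] = number of raters who put item i in category j
    counts = []
    for row in ratings:
        tally = Counter(row)
        counts.append([tally[c] for c in categories])

    # Observed agreement: mean over items of the per-item agreement P_i
    p_items = [
        (sum(n * n for n in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_bar = sum(p_items) / n_items

    # Chance agreement: sum of squared overall category proportions
    p_cats = [
        sum(row[j] for row in counts) / (n_items * n_raters)
        for j in range(len(categories))
    ]
    p_exp = sum(p * p for p in p_cats)

    if p_exp == 1.0:
        # Every rating fell in one category, so kappa is undefined
        return float("nan")
    return (p_bar - p_exp) / (1.0 - p_exp)


# Hypothetical coding: 1 = yes, 2 = no, 3 = unsure.
# One image = 7 question rows with 5 ratings each (made-up values).
one_image = [
    [1, 1, 1, 1, 1],
    [1, 1, 2, 1, 1],
    [2, 2, 2, 1, 2],
    [1, 1, 1, 1, 3],
    [1, 2, 1, 2, 1],
    [2, 2, 2, 2, 2],
    [1, 1, 1, 1, 1],
]
print(fleiss_kappa(one_image, categories=[1, 2, 3]))
```

Under this layout, a per-image kappa takes the 7 question rows for that image, and an overall kappa takes all 210 rows at once. The sketch returns the point estimate only; it does not compute the confidence interval mentioned above.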

|


Okay thank you, I will calculate the kappa scores for each image individually - I may also work out the total to add extra information.

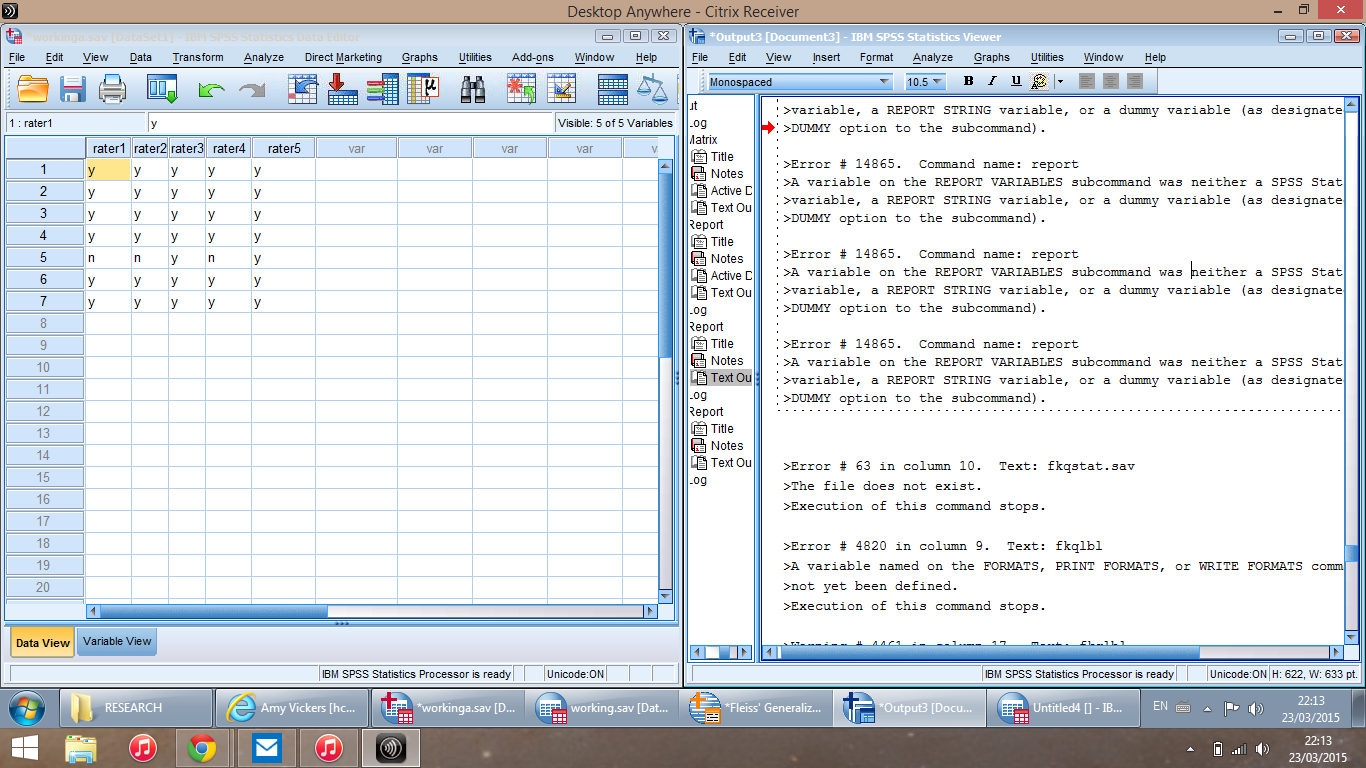

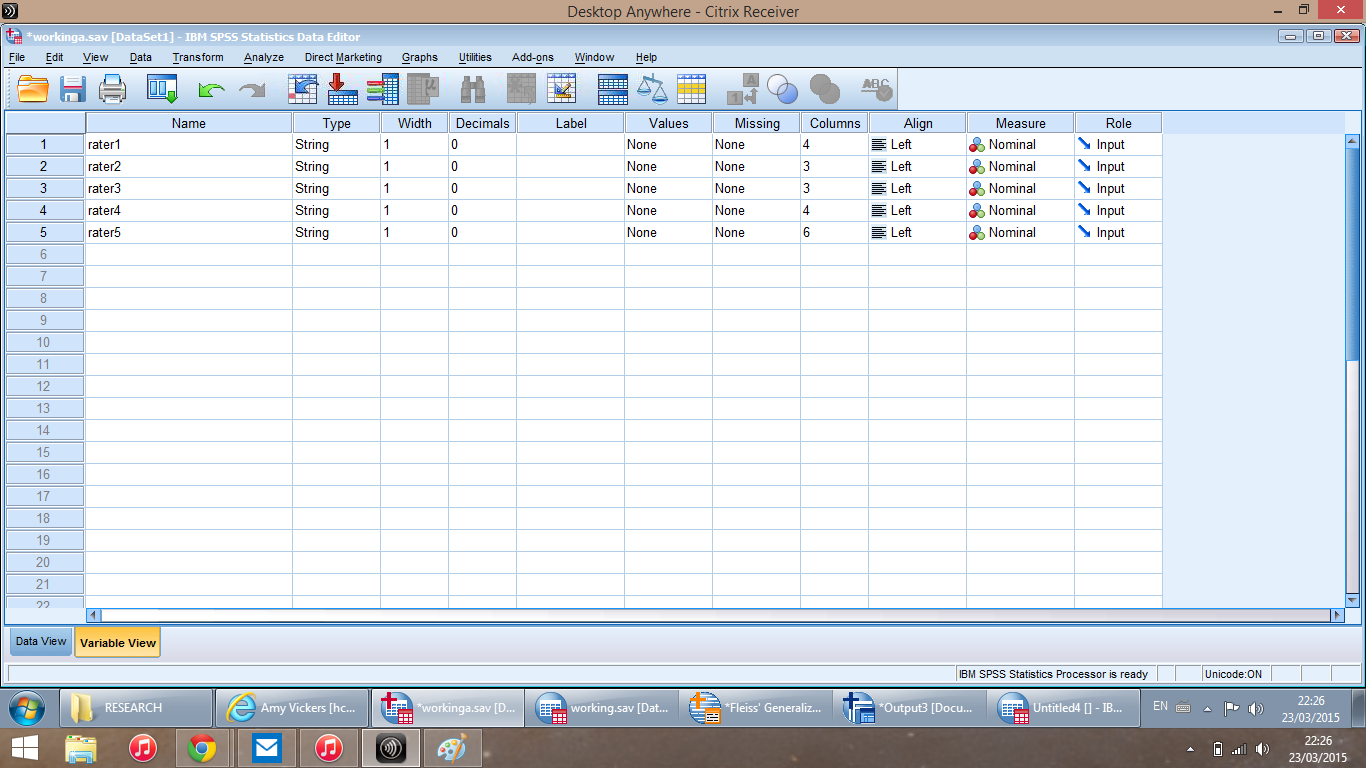

I received the macro file and am trying to use it; however, it keeps bringing up error messages. I have taken a screenshot of the .sav file I am trying to work out the kappa value for (this is on the left side of the screen), and on the right is a section of the error message it is bringing up. The text of the main error is as follows:

Error # 14865. Command name: report
>A variable on the REPORT VARIABLES subcommand was neither a SPSS Statistics
>variable, a REPORT STRING variable, or a dummy variable (as designated by the
>DUMMY option to the subcommand).

I have also attached an image of the 'variable view' part of the .sav file. Can you see where I am going wrong?

Thanks

**EDIT** After changing the input from letters to numbers I have successfully been able to produce a kappa score. Thanks! |
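The fix reported in the edit above (switching the responses from letters to numbers) can be illustrated outside SPSS. The Python sketch below is only an illustration of that recoding step: the letter codes `Y`, `N`, `U` and the numeric values 1, 2, 3 are assumptions, since the thread does not say which letters or numbers were actually used.

```python
# Hypothetical mapping from letter codes to numeric codes.
# The thread does not state the real codes; adjust to match your data.
CODES = {"Y": 1, "N": 2, "U": 3}


def recode(rows, codes=CODES):
    """Convert rows of letter responses into rows of numeric codes.

    Raises KeyError for any response that is not in `codes`, which
    helps catch typos in the data before computing kappa.
    """
    recoded = []
    for row in rows:
        recoded.append([codes[value.strip().upper()] for value in row])
    return recoded


# Two made-up question rows, five raters each.
letters = [
    ["Y", "Y", "N", "Y", "U"],
    ["N", "N", "N", "Y", "N"],
]
print(recode(letters))
```

Failing loudly on an unknown response is a deliberate choice here: a stray blank or misspelt code is easier to fix before the agreement statistic is computed than after.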

|

In reply to this post by Vickers1994

I don't like the statement of the problem. Is the experiment supposed to conclude something about the images, or something about the raters?

The direct application that I see for Fleiss's kappa produces 210 kappas (7 x 30 = 210, multiplying questions by images). And it assumes that 'unsure' is *not* a response falling between 'yes' and 'no' -- That seems like a waste of information, which is never desirable, but the N=5 is not encouragement for waste.

If the continuous scores (y, unsure, n) were presented in one large ANOVA, images by items by raters, would many of the effects be interesting?

Are the 7 questions supposed to tap the same dimension? Is an average meaningful? Would the 7 questions yield one or two continuous summary scores for an image, in any fashion? [Again, is this about Images or Raters?]

-- Rich Ulrich |

It is looking at both. I will give you some context - the images I refer to are x-rays, and I am asking participants whether they can see the anatomy which should be visible, which forms the 7 questions - for example, 'can you see the talus?' would be one question. My primary aim is to establish how many of the x-rays are diagnostically acceptable, but I am also looking at the interrater agreement between the participants. The latter is what I am using SPSS to establish.

Yes, I see your point: I am treating the data as nominal, but there is actually a relationship between the three responses. I think there are only 3 'unsure' responses throughout all results, so I am not sure presenting the scores as you suggest would show any interesting relationships. How would you suggest taking this relationship into account? A weighted kappa?

Thanks,

Amy |

|

Okay. Well, I would tackle this in a different order. Since the "primary aim" is to establish how many images are acceptable, I would score up the images for acceptability. Do all seven answers need to be Yes? If there are one or two answers of No, does it matter which ones they are? Is the talus easier/harder to spot than something else? -- If certain answers are more likely to be No, then it makes nonsense of an attempt to compute a useful "reliability" number that computes a correlation across those items.

But it does make sense to count the total number of Yes's. (And, "not sure" counts as "not Yes", so the total counts can be reported without ambiguity.) If the scores are not mostly 7, then it can be simple to look at the Pearson r's between raters: perhaps, while looking at paired t-tests for their scores. If most scores *are* 7, then you find yourself with minimal variation in the whole sample: That can be a nice result all by itself, but low variation spawns low correlations.

Since any correlation, for reliability or otherwise, depends on the variability of the sample, it is important to note how varying the sample is. In particular, how many of the 30 images get scores of perfect 7s by all raters? Were the images selected to represent a range of difficulty? -- If Yes, that can be a good thing, for getting a higher correlation; but it might falsely suggest something bad when you look at details because it will inflate the number of disagreements, compared to an examination of only "good" charts. In either case, it *must* be noted in the narrative.

How varying are the raters? Do they each down-rate the same number of images, or are one or two of the five more/less easily satisfied?

What I have described is mainly simple tabulations. I have asked questions that frame the potential usefulness of other sorts of followups. For instance, if 25 of the images were perfect by everyone, you are left with trying to generalize from a practical set of contrasts among N=5 images, not 30.

-- Rich Ulrich |
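The tabulations suggested in this reply (total Yes's per rater per image, Pearson r between raters, and the number of images scored a perfect 7 by everyone) can be sketched in a few lines of Python. This is only an illustration under assumed names and codes: `YES = 1` and the nested-list layout `responses[image][rater]` are hypothetical and do not come from the thread.

```python
from math import sqrt

YES = 1  # hypothetical numeric code for a 'yes' answer


def yes_totals(responses):
    """responses[image][rater] is that rater's list of 7 answers.

    Returns totals[image][rater] = number of 'yes' answers, so that
    'unsure' simply counts as 'not yes'.
    """
    return [
        [sum(1 for answer in answers if answer == YES) for answers in image]
        for image in responses
    ]


def pearson_r(x, y):
    """Pearson correlation; None when either series has no variation."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return None  # e.g. a rater who scored every image 7
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    return sxy / sqrt(sxx * syy)


def count_perfect_images(totals, n_questions=7):
    """Number of images that every rater scored as all 'yes'."""
    return sum(
        1 for image in totals if all(t == n_questions for t in image)
    )


# Made-up data: 3 images, 2 raters, 7 answers each (1=yes, 2=no, 3=unsure).
responses = [
    [[1] * 7, [1] * 7],
    [[1, 1, 1, 1, 1, 2, 3], [1, 1, 1, 1, 2, 2, 2]],
    [[1, 1, 1, 2, 2, 2, 2], [1, 1, 1, 1, 1, 1, 2]],
]
totals = yes_totals(responses)
rater_a = [image[0] for image in totals]
rater_b = [image[1] for image in totals]
print(totals, count_perfect_images(totals), pearson_r(rater_a, rater_b))
```

Returning `None` when a rater's totals never vary mirrors the point made above: with minimal variation in the sample, a correlation is either undefined or uninformative, so the count of perfect images is worth reporting alongside it.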
