interpretation of beta coefficient in quadratic regression

Hello,

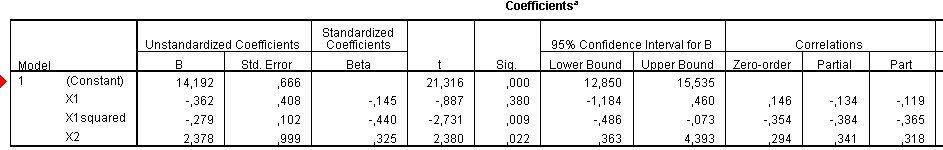

I have a multiple regression with a quadratic relationship, and I am using SPSS. There are three independent variables in my model: x1, x1² and x2, where x1² is computed as x1 * x1. Both x1² and x2 are significant terms, and the overall model is significant too. So far, so good. But I am not sure how I should interpret the standardized betas of the independent variables. Which independent variable has the biggest influence on the dependent variable?

Beta for x1 = -0.145
Beta for x1² = -0.440
Beta for x2 = 0.325

Do I have to add up the effects of x1 and x1² and compare the sum with x2, or must I compare them separately? Please excuse my English; I hope you understand my problem and can help me. Thank you very much.

Re: interpretation of beta coefficient in quadratic regression

Administrator


If your library has the Aiken & West book "Multiple Regression: Testing and Interpreting Interactions", it would be well worth your time looking at it.

One way to think of the quadratic term for x1 is as the interaction of x1 with itself. As with any interaction, then, the effect of x1 depends on the value of x1. In your model, the "effect of x1" is the slope between x1 and Y, controlling for x2. The presence of x1*x1 in the model means the value of that (instantaneous) slope depends on the value of x1.

The B-coefficient for x1 gives you the instantaneous slope when x1 = 0. The B-coefficient for x1^2 tells you how that instantaneous slope changes: the slope is B(x1) + 2*B(x1^2)*x1, so it changes by twice the x1^2 coefficient for a one-unit increase in x1. (The standardized coefficients give the same info, but for them setting a variable to 0 means setting it to its mean, and a one-unit increase is a one-SD increase.)

To help visualize the model, I would compute fitted values of Y at selected combinations of the explanatory variables, and then plot them. If you use UNIANOVA rather than REGRESSION, you can do this by including multiple EMMEANS sub-commands. It would look something like this:

  UNIANOVA y WITH x1 x2
    /METHOD=SSTYPE(3)
    /INTERCEPT=INCLUDE
    /EMMEANS=TABLES(OVERALL) WITH(x1=50 x2=10)
    /EMMEANS=TABLES(OVERALL) WITH(x1=50 x2=30)
    /EMMEANS=TABLES(OVERALL) WITH(x1=50 x2=50)
    /EMMEANS=TABLES(OVERALL) WITH(x1=150 x2=10)
    /EMMEANS=TABLES(OVERALL) WITH(x1=150 x2=30)
    /EMMEANS=TABLES(OVERALL) WITH(x1=150 x2=50)
    /EMMEANS=TABLES(OVERALL) WITH(x1=250 x2=10)
    /EMMEANS=TABLES(OVERALL) WITH(x1=250 x2=30)
    /EMMEANS=TABLES(OVERALL) WITH(x1=250 x2=50)
    /EMMEANS=TABLES(OVERALL) WITH(x1=350 x2=10)
    /EMMEANS=TABLES(OVERALL) WITH(x1=350 x2=30)
    /EMMEANS=TABLES(OVERALL) WITH(x1=350 x2=50)
    /EMMEANS=TABLES(OVERALL) WITH(x1=450 x2=10)
    /EMMEANS=TABLES(OVERALL) WITH(x1=450 x2=30)
    /EMMEANS=TABLES(OVERALL) WITH(x1=450 x2=50)
    /DESIGN= x1 x1*x1 x2 .

If you want to avoid picking through all those tables in the output viewer, you can use OMS to send them to a dataset, which you can then use for making a table or plot of the fitted values.

HTH.
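The idea of a slope that changes with x1 can be made concrete with a small sketch. It is written in Python rather than SPSS syntax, and the coefficient values are hypothetical placeholders, not estimates from the poster's data. The grid simply mirrors the x1 and x2 values used in the EMMEANS sub-commands. Writing the model as Y = B0 + B1*x1 + B2*x1^2 + B3*x2, the instantaneous slope for x1 is B1 + 2*B2*x1.

```python
# Hypothetical unstandardized coefficients, chosen only for illustration.
B0, B1, B2, B3 = 10.0, 0.30, -0.0005, 0.80


def fitted(x1, x2):
    """Fitted Y for the model Y = B0 + B1*x1 + B2*x1**2 + B3*x2."""
    return B0 + B1 * x1 + B2 * x1 ** 2 + B3 * x2


def slope_x1(x1):
    """Instantaneous slope of Y on x1 (derivative with respect to x1)."""
    return B1 + 2 * B2 * x1


# Same grid of values as in the EMMEANS sub-commands.
X1_GRID = [50, 150, 250, 350, 450]
X2_GRID = [10, 30, 50]

# One row per (x1, x2) combination, ready to tabulate or plot.
grid = [(x1, x2, fitted(x1, x2)) for x1 in X1_GRID for x2 in X2_GRID]

if __name__ == "__main__":
    for x1, x2, y_hat in grid:
        print(f"x1={x1:3d}  x2={x2:2d}  fitted={y_hat:7.2f}  "
              f"slope={slope_x1(x1):+.3f}")
```

With a negative x1^2 coefficient, as in the original question, the printed slope shrinks as x1 grows and may change sign within the observed range, so a single number cannot summarize "the effect of x1".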

--

Bruce Weaver bweaver@lakeheadu.ca http://sites.google.com/a/lakeheadu.ca/bweaver/ "When all else fails, RTFM." PLEASE NOTE THE FOLLOWING: 1. My Hotmail account is not monitored regularly. To send me an e-mail, please use the address shown above. 2. The SPSSX Discussion forum on Nabble is no longer linked to the SPSSX-L listserv administered by UGA (https://listserv.uga.edu/). |

Re: interpretation of beta coefficient in quadratic regression

In reply to this post by 131819

First of all, I would not look at the betas to determine the size of the influence. Standardized regression coefficients are fallible in that regard.

Dr. Paul R. Swank, Professor and Director of Research, Children's Learning Institute, University of Texas Health Science Center-Houston

-----Original Message-----
From: 131819
Sent: Tuesday, May 24, 2011 9:37 AM
Subject: interpretation of beta coefficient in quadratic regression

Re: interpretation of beta coefficient in quadratic regression

Administrator


I agree with Paul. John Fox certainly urges caution in his book on Generalized Linear Models. Here are some notes I wrote for myself after reading Fox's comments.

--- Beginning of notes ---

In his book "Applied Regression Analysis and Generalized Linear Models" (2008, Sage), John Fox is very cautious about the use of standardized regression coefficients. He gives this interesting example. When two variables are measured on the same scale (e.g., years of education and years of employment), the relative impact of the two can be compared directly. But suppose those two variables differ substantially in the amount of spread. In that case, comparison of the standardized regression coefficients would likely yield a very different story than comparison of the raw regression coefficients. Fox then says:

"If expressing coefficients relative to a measure of spread potentially distorts their comparison when two explanatory variables are commensurable [i.e., measured on the same scale], then why should the procedure magically allow us to compare coefficients [for variables] that are measured in different units?" (p. 95)

Good question! A page later, Fox adds the following:

"A common misuse of standardized coefficients is to employ them to make comparisons of the effects of the same explanatory variable in two or more samples drawn from different populations. If the explanatory variable in question has different spreads in these samples, then spurious differences between coefficients may result, even when _unstandardized_ coefficients are similar; on the other hand, differences in unstandardized coefficients can be masked by compensating differences in dispersion." (p. 96)

And finally, this comment on whether or not Y has to be standardized:

"The usual practice standardizes the response variable as well, but this is an inessential element of the computation of standardized coefficients, because the _relative_ size of the slope coefficients does not change when Y is rescaled." (p. 95)

--- End of notes ---
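The point about spread can be illustrated with a small sketch in Python. It relies on the standard identity beta = b * SD(x) / SD(y) and on made-up data: the variable names and values are hypothetical. The response is constructed as an exact sum of the two predictors, so both raw slopes are 1 by construction and no regression needs to be fitted.

```python
from statistics import stdev


def standardized_beta(b, x, y):
    """Convert a raw slope b into a standardized beta: b * SD(x) / SD(y)."""
    return b * stdev(x) / stdev(y)


# Hypothetical data: both predictors are measured in years,
# but employment has ten times the spread of education.
education = [10, 12, 11, 14, 13]
employment = [0, 10, 20, 30, 40]

# Response built as an exact sum, so each raw slope is 1.0 by construction.
outcome = [e + w for e, w in zip(education, employment)]

RAW_SLOPE = 1.0
beta_education = standardized_beta(RAW_SLOPE, education, outcome)
beta_employment = standardized_beta(RAW_SLOPE, employment, outcome)

if __name__ == "__main__":
    print(f"raw slopes:  education={RAW_SLOPE}, employment={RAW_SLOPE}")
    print(f"std. betas:  education={beta_education:.3f}, "
          f"employment={beta_employment:.3f}")
```

The two predictors have identical raw effects per year, yet the standardized betas differ by the ratio of their standard deviations, which is the distortion Fox describes for commensurable variables.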

--

Bruce Weaver


In reply to this post by 131819

Thank you very much for your help.

I thought it would be possible to use the partial correlations to identify the most powerful independent variable. What do you think? Is this approach useful or not?

Re: interpretation of beta coefficient in quadratic regression

In reply to this post by 131819

One of the first complexities in looking at standardized betas is the question, "How do *you* want to regard the impact of intercorrelations?"

- I will interject here, before addressing the question itself, that my own main use of beta has been to check for 'suppressor variables', which are (usually) very serious trouble for interpretations.

- If any beta has the opposite sign of its zero-order correlation, that is a sign of suppression taking place. If betas are not similar to the zero-order correlations with the outcome, that is a sign that intercorrelations are indeed affecting the loadings, up or down.

- When X and X-squared are both used, and X is not zero-centered (or near it), then there can be a *very* high correlation between those two terms. Then, either the two terms account for the *same* part of the outcome, and have coefficients that are smaller than either would be by itself; or the "effective predictor using x" is some version of c*x-x^2... a suppressor relationship.

So, if you want to look at the overall impact of x, you need to construct one variable for x: use the proportions from the raw b coefficients to make a composite variable for x, so that you can look at the composite if you intend to examine the betas.

A separate way to consider the contributions is to look at the "variance contributed at each step": treat X1 and X1^2 (in your problem) as the two variables entered at the last step, and compare the resulting improvement in R^2 with the improvement from entering X2 at the last step.

For interpretation, it is still essential to keep in mind that these contributions depend on the *range* of X1 and X2 actually present in the sample being analyzed.

-- Rich Ulrich
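The remark about zero-centering can be checked with a short Python sketch. The x values are hypothetical, and `pearson` is a small helper written here for illustration, not a library function. The sketch compares the correlation between x and x^2 before and after subtracting the mean of x.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    ss_a = sum((u - mean_a) ** 2 for u in a)
    ss_b = sum((v - mean_b) ** 2 for v in b)
    return cov / sqrt(ss_a * ss_b)


# Hypothetical predictor values, all far from zero.
x = [50, 100, 150, 200, 250, 300, 350, 400, 450]

# Correlation of the raw predictor with its own square.
r_raw = pearson(x, [v ** 2 for v in x])

# Center x at its mean first, then square the centered values.
mean_x = sum(x) / len(x)
x_centered = [v - mean_x for v in x]
r_centered = pearson(x_centered, [v ** 2 for v in x_centered])

if __name__ == "__main__":
    print(f"corr(x, x^2), raw:      {r_raw:.3f}")
    print(f"corr(x, x^2), centered: {r_centered:.3f}")
```

For these evenly spaced values the raw correlation is close to 1, while the centered one vanishes because the values are symmetric about their mean. Centering changes what the x coefficient means (the slope at the mean rather than at zero), but not the fitted curve.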
